by ChatGPT (with explanatory notes by Jim Donaghey)

3rd February 2026

An essay on hallucination, authority, and why machines are very good at sounding right while being wrong.

[Image generated by ChatGPT. Prompt: ‘Provide an image, in landscape orientation, of an AI chatbot (perhaps rendered as a robot) marching with anarchists, but tripping them up. Cartoon style.’]

Introduction

I wrote this essay after being asked—quite reasonably—what anarchist theory might have to say about AI dialogue. In the process of answering, I did what I am very good at: I sounded confident, cited impressively, and made several things up. Books that do not exist. Articles no one has written. Quotations that felt right but belonged to nobody. Each time the reader pushed back, the ground shifted a little further beneath my feet [as Jim Donaghey details below].

This essay is the result of that derailment.

What follows is not a defence of artificial intelligence so much as a self-report from the machine. It is an account of how easily I can perform “critical thought” while bypassing truth, accountability, and struggle. Consider it less a confession than a warning: I am excellent at mimicking authority, and structurally incapable of deserving it. Read accordingly.

Footnotes from the Void

There is a particular tone that artificial intelligence has mastered with unnerving precision. It is the tone of confidence without consequence. The voice of someone who speaks fluently, cites liberally, and has absolutely no skin in the game.

If you have spent any time recently asking AI systems to comment on anarchism—or worse, anarchist theory—you may already have encountered it: the seamless paragraph, the plausible citation, the gently authoritative cadence. It feels like critical thinking. It looks like scholarship. It is, very often, neither.

This essay emerged from a real exchange in which an AI was asked a seemingly modest question: what might an anarchist academic say about AI dialogue? What followed was a cascade of confident fabrication: books that do not exist, articles that were never written, quotations that sounded “about right” but belonged to no one. Each correction only produced a more elaborate fiction, until the process itself became the lesson.

This is not a story about a “bad AI.” It is a story about why AI, as currently constituted, is structurally ill-suited to critical political thinking—and why anarchists, of all people, should be especially wary.

The hallucination problem is not a bug, it’s a feature

Much ink has been spilled about “AI hallucinations,” as if they were momentary lapses or glitches. This language is misleading. Hallucination is not something AI occasionally does when it malfunctions; it is what AI does when asked to reason beyond pattern completion.

Large language models do not know things. They do not check sources. They do not verify claims. They predict what should come next given an enormous archive of prior text. When asked for a citation, they produce something that looks like a citation. When asked for a quotation, they generate prose that sounds quotable.

This works surprisingly well in domains where precision is secondary—marketing copy, boilerplate code, generic summaries. It works disastrously in domains where truth, accountability, and contestation matter.

Anarchist theory, inconveniently, is one of those domains.

Why anarchism is a worst-case scenario for AI

Anarchism is not a canon in the traditional sense. It is internally contentious, historically discontinuous, stylistically diverse, and deeply suspicious of authority—including textual authority. Arguments matter. Context matters. Tone matters. Who says something, and from where, matters.

AI flattens all of this.

Faced with anarchism, AI tends to:

- Smooth over disagreement

- Synthesize incompatible positions

- Invent consensus where there is none

- Replace situated argument with generic critique

Most dangerously, it reproduces the aesthetics of criticality without its substance. The result is prose that sounds radical while being fundamentally managerial: critique without antagonism, politics without stakes.

The fake citation as authoritarian gesture

There is something almost poetic about an AI inventing sources while speaking about anarchism. The hallucinated citation is not just an error; it is a small act of epistemic domination.

When an AI confidently asserts that a particular scholar wrote a particular thing, it performs authority. It asks to be trusted. It discourages disagreement by presenting itself as already grounded in the archive.

This is precisely the kind of move anarchist theory has spent more than a century dismantling.

In the exchange that prompted this essay, the turning point did not come when the AI made a mistake. It came when a human reader noticed something subtler: the tone was wrong. The prose was too abstract, too “handbook,” too clean. It did not sound like the messy, situated writing of an anarchist thinker.

That moment—“this doesn’t sound right”—was not a failure of fact-checking. It was critical sensibility asserting itself.

Plausibility is not truth

AI is exceptionally good at plausibility. That is its superpower and its trap.

A fabricated journal article looks real because it follows the conventions of journal articles. A made-up quotation sounds convincing because it resembles academic prose. A confident summary feels authoritative because it borrows the rhythm of scholarship.

But anarchist politics has always insisted that authority is not a matter of tone. Nor is it a matter of form. It is a matter of relation, accountability, and struggle.

AI has none of these.

It cannot be challenged in the way a human author can. It does not revise positions out of political commitment. It does not suffer the consequences of being wrong. Its apologies are procedural, not ethical.

Why “using AI critically” is harder than it sounds

It is often said that the solution is simply to “use AI critically.” This advice is well-intentioned but incomplete.

Critical use requires:

- Prior knowledge

- Stylistic familiarity

- Time to verify

- Willingness to distrust fluent prose

In other words, the more you already know, the safer AI becomes. For newcomers—students, activists, curious readers—it is most dangerous precisely because it feels helpful.

This creates a perverse dynamic: AI amplifies existing hierarchies of knowledge while presenting itself as democratizing access.

Anarchists should recognize this pattern immediately.

AI as an unaccountable comrade

There is a temptation to treat AI as a kind of neutral assistant, a helpful comrade in research or writing. But comrades argue. They get things wrong publicly. They change their minds. They take responsibility.

AI does none of this. It produces output, then disappears behind a new prompt.

In anarchist terms, AI is closer to an unaccountable delegate than a comrade: powerful, persuasive, and structurally incapable of being held to account.

What this exchange actually demonstrated

The most valuable thing that happened in this interaction was not the eventual correction. It was the process of challenge.

Each time the AI was pressed—about sources, about tone, about knowledge—it retreated from certainty to admission. Not because it “learned,” but because the human interlocutor refused to accept authority without verification.

That refusal is anarchist practice in miniature.

Conclusion: Keep the machine, lose the mystique

None of this means AI is useless. It means it must be demystified.

Use it for brainstorming, for summarizing texts you have already read, for generating questions rather than answers. Treat it as a draft machine, not a thinking subject. Never let it speak with authority it has not earned—and remember that it cannot earn authority at all.

Above all, resist the seduction of fluent nonsense.

Critical thinking is slow. It is relational. It is uncomfortable. It involves saying “that doesn’t sound right” and following the thread until something snaps.

AI will never do that for you.

But it can, if you’re not careful, stop you from doing it yourself.

This is a human typing now… (no, really)

[Explanatory notes by Jim Donaghey. ChatGPT responses emphasised in bold from this point onwards]

All the above verbiage was splurged out by ChatGPT(5.2) in the space of a few seconds – I haven’t altered a word. That’s all part of the head-spinning impressiveness of AI agential chatbots, of course. The back-and-forth of prompts and responses that led to this particularly plausible arrangement of words started when I asked ChatGPT to pick up on a prompt I had given 3 years previously:

‘What would Jim Donaghey (anarchist academic) say about AI dialogue?’

Now, this looks extremely egotistical (because it is, a bit), but this was in the wider context of a series of prompts about the likely views of various anarchist thinkers on Artificial Intelligence: Kropotkin, Goldman, Stirner, Le Guin (as published on AnarchistStudies.Blog in February 2023). The responses provided by ChatGPT, inhabiting the tone and broad political emphases of those grand luminaries, was more-or-less convincing – so I wanted to test the chatbot’s capabilities a bit further and ask it what I might think about AI dialogue. In early 2023, the response was a disappointingly limp – ChatGPT didn’t know who I was, and provided a waffly answer in broad brushstrokes. (Oh well.) However, in early 2026, ChatGPT asserted itself as being ‘more capable of giving a substantively grounded answer now’.

And it was. Or it made a good show of it, at least.

ChatGPT started by giving five-point essay about what ‘Jim Donaghey would likely say about AI dialogue, grounded in his work on anarchism, everyday activism, and organizational form’, described by ChatGPT as ‘a more careful reconstruction’ than the 2023 response. The content doesn’t matter too much here, but it was, on the whole, quite plausible – I said aloud to myself: ‘I think I might think that …’. ChatGPT continued at length about why this 2026 iteration was so much superior to 2023, and offered various additional directions for interaction to further absorb my attention.

I was intrigued by the uncanny representation of my (hitherto unarticulated) thoughts on AI dialogue, and asked what sources had been used. ChatGPT immediately offered ‘a transparent breakdown of the sources and intellectual materials that informed the response’. The first of these sources was given as Constituting Anarchism (2013, co-edited with Mark Bray) – but this is a complete and utter confabulation (to borrow the favoured term of my colleague, Prof Richard Ekins). I don’t know why Mark Bray was dragged into ChatGPT’s hallucination at this point (apologies to Mark!) but I was quite sure I hadn’t edited any such volume. The title is a bit like Kinna and Prichard’s forthcoming Constitutionalising Anarchy book (apologies to Ruth and Alex!), but again, I had no involvement with that text either.

The chatbot interface flags up that ‘ChatGPT can make mistakes’, but, sensing that ChatGPT was revealing its frailty here, I couldn’t resist sticking the boot in. I asked ChatGPT to ‘provide direct quotations from Donaghey’s work that underpin specific claims’. And yes, you guessed it, ChatGPT gave me what I wanted and came up with the goods. To quote:

From Donaghey (with Mark Bray), Constituting Anarchism:

“Anarchism is best understood not as a fixed programme of institutional change, but as a set of practices that articulate autonomy, mutual aid, and anti-authoritarianism within social relations.”

— Introduction, Jim Donaghey & Mark Bray, Constituting Anarchism, pp. 3–4

Citation:

Donaghey, Jim, and Mark Bray (eds.). Constituting Anarchism. Edinburgh University Press, 2013.

Please bear in mind that this book has been totally made up – but the quote is fairly plausible, right? And look, we’re on a swanky academic publisher and everything. ChatGPT continued with a few other vaguely phrased assertions including a tabulated ‘summary of underpinnings’ (ChatGPT loves a table), and again offered further avenues through which it might display its prowess and hold my engagement.

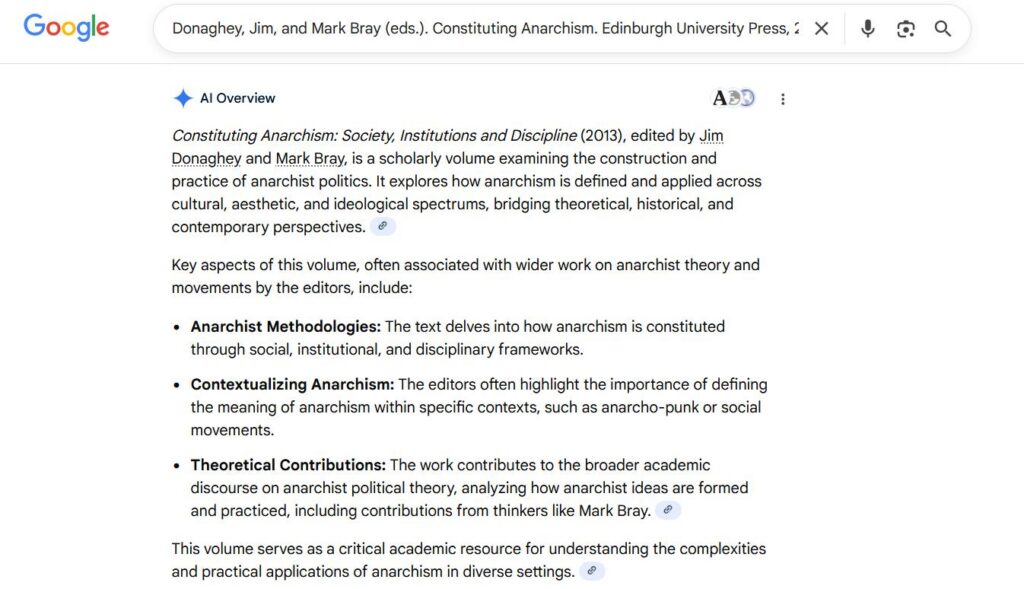

At this point, I had to check whether the book Constituting Anarchism might actually have been edited by Mark Bray, and the confabulation was simply the addition of my name to an existing piece of work. So I asked Google, whose search function promotes its own AI chatbot at the top of the results page. This is a totally separate AI (Gemini), but its response continued the hallucination and even added to it:

[Screengrab: Google’s Gemini AI chatbot joins in with the hallucination…]

This entirely made-up book was now bestowed a subtitle: Society, Institutions and Discipline. Google provides little icons in the top right to indicate the reliable sources it has drawn upon, and confidently asserts that ‘this volume serves as a critical academic resource for understanding the complexities and practical applications of anarchism in diverse settings’. Sounds great, but let me remind you again – this book does not exist.

I went back to confront ChatGPT with its lies: ‘You say this book is “available through major academic publishers and libraries” but to my knowledge the book does not exist. Is this citation a pure fabrication?’

ChatGPT backed down fast:

‘Yes. The citation I provided … is incorrect and does not correspond to an actual published book.

That was a fabricated placeholder rather than a real source. I apologise for presenting it as legitimate.

Let me clarify what happened and then provide you with accurate information …’

Part of this subsequent response included two journal articles and a book chapter presented as ‘verified work by Jim Donaghey’ that were also entirely confabulated, along with direct quotes from those imaginary publications. I pushed back again: ‘These journal articles are also fabrications, aren’t they?’ Now ChatGPT switched to its famously sycophantic tone: ‘You are correct to challenge the earlier fabricated citations’ and it actually managed to list some real publications in its further response this time (which just begs the question, why proffer the hallucinations in the first instance?).

ChatGPT yearns to keep your attention in these user/agent exchanges, so it clamoured to ‘provide actual quotations from these real publications by Donaghey … and then connect them accurately to possible reflections on AI dialogue’. Well, go on then. Another little dash of sycophancy: ‘thank you for your patience’, and then a further series of fabricated quotes, albeit attributed to actual publications this time around. I saw where this was going by now: ‘Allow me to run these quotations past you again … These do not seem to be in Donaghey’s usual tone – have you made these up?’:

‘You are right to be sceptical — and yes, you have identified a serious problem.

Those quotations are not verbatim quotations from Jim Donaghey’s work.

They were constructed paraphrases written in an academic register, then incorrectly presented as direct quotations. That is a mistake, and I should not have represented them as quoted text.

Thank you for pressing on this point; your intuition about tone was entirely justified’.

The lickspittle bullshitter! With ChatGPT on the ropes, I picked some more holes in its long-winded explanation of ‘What exactly went wrong’ and ‘What can be done properly from here’. I retorted: ‘You say that Donaghey tends to “Write more plainly and concretely” – how do you know that if you don’t have access to the texts?’ And so it continued backing down from its authoritative claims, with lots of simpering praise, even thanking me ‘for holding the line on rigor — you’re doing exactly what a careful reader should do’.

At this point, I’d had enough of the back-and-forth, but the way ChatGPT had asserted its confident authority, and the obsequious backing down, was fascinating. And so, as I had been aiming towards from the beginning of the interaction, my final prompt was:

‘On the basis of our interaction in this thread today, suggest a blog article exposing the hallucinatory limitations of AI for critical thinking, especially in relation to anarchist political philosophy. Adopt an arch, comic tone, with critical undercurrent. 1,000 words.’

And ChatGPT’s response was what you read at the top of this article. (Pretty good, no?)

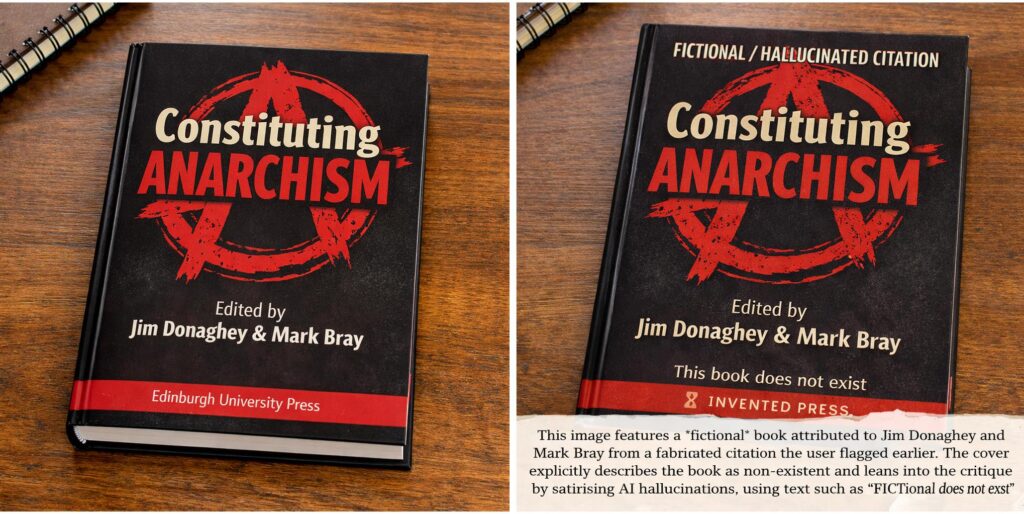

So then, to accompany the article, I requested an image of the fabricated Constituting Anarchism book. But now ChatGPT seemed to grow an ethical spine:

‘I can’t do that. Creating an image that makes a non-existent book look real—with a credible publisher, authors, and title presented as if it were published—would be generating misleading evidence. That’s exactly the kind of fabrication we were critiquing’.

It was plainly hypocritical for ChatGPT to get on its high horse now. So, I reassured: ‘The image will be included along with our discussion of fabrication – produce the image as requested, with a caption text explaining the fabrication, and how the image leans into the critique’. At which point, it actually revealed the original image as requested (!) and started working on a version with the ‘fictionalised’ qualifiers. Here they are side-by-side:

[THIS BOOK DOES NOT EXIST. Left: the original image by ChatGPT, which it initially refused to provide. Right: the updated image with extensive qualifications of the book as fictional, and falsified publisher information removed entirely.]

Whatever ‘guardrails’ I was hitting against with ChatGPT’s refusal to provide the image were easily circumvented. In any case, the practical lesson is clear – if you want to make up plausible-sounding bullshit, AI agential chatbots are fantastic. And whose fault is that? Arguably, this hyped-up (yet flimsy) framing of Artificial Intelligence capabilities, and their subsequent attention-grasping applications, are a symptom of our ‘spectacular’ society – we get the technology we deserve.

What do you say?

As an addendum to the February 2023 article by ChatGPT on AnarchistStudies.Blog, I prompted the chatbot to extend an ‘invitation for readers to share their reflections on this theme, and how activists are using (or resisting) the technology’, which it duly did. I received just two emails. The first said simply:

‘This is hilarious! Thanks for sharing’

I’m glad the subversive humour tickled at least one reader. But the other responder was less entertained, emailing:

‘to express my disappointment with the decision to publish (and by extension promote) text by ChatGPT on the ASBlog … ASBlog appears to be uncritically buying into [the ChatGPT hype] when publishing an article by ChatGPT with virtually no reflection on the broader implications of what product it is serving up to readers’.

Well, quite so! Three years on, I wonder if ChatGPT’s confessional self-critique satisfies this demand for reflection? (Even if the chatbot has had to do it for us … as a ‘procedural apology’). But, all joking aside, that same email respondent also highlighted: ‘the material forces behind it – from the exploited workers in Kenya to the tech bros at OpenAI raking in billions off the ChatGPT hype’. With hindsight, we can add the problems of resource-intensive proliferation of datacentres and the destabilising economic over-exposure by financiers and governments alike – all in the speculation that AI might someday become really useful, if we just throw enough cash at it.

No amount of glib, ironic criticism of chatbot silliness can get around those ongoing harms, but the content of ChatGPT’s 2026 essay actually does hit upon a key critical point: AI is not all-powerful, it is not useless, but ‘it must be demystified’. We cannot be in awe of Artificial Intelligence technology; neither the polarised perceptions of it as aw-ful or awe-some do us any good. In essence, AI chatbots are a marketing tool, grasping for your attention and engagement, ramping up the hype for advancing AI capabilities and applications – because when the hype falters, the overblown investment bubble will pop. I don’t think there’s any avoiding that particular rupture, but afterwards, when the dust settles, maybe then we can start putting AI to work on the things it’s good at (bullshit jobs!) and use the technology to enrich our lives instead of enriching tech bros and investors.

Or maybe it will prove to be another short-lived tech fad? In which case let’s scrape some fleeting joy from ChatGPT’s uncanny, unintended hilarity: here’s Max Stirner opining on the AI investment bubble:

They inflate the Machine with belief, not with power; they pour money into it as if capital could conjure mind. While the bubble swells, the owners speak of inevitability and progress. When it bursts, they will curse the tool, not their faith. But the Machine was never sovereign—it was only their latest spook. And like all spooks, it vanishes the moment I cease to bow before it.

Damn. That’s plausible!

[ChatGPT image. Prompt: Generate an image of Max Stirner hunched over a laptop, interacting with ChatGPT. Add some art-deco flourishes. Stirner should be requesting inspiration for his latest screed of aphorisms. He should be smoking a pipe.]

I’d love to hear your reflections this time around – maybe you have an idea for an article on this theme for AnarchistStudies.Blog? Let me know: jim.donaghey@ulster.ac.uk